Software's Impact on Productivity

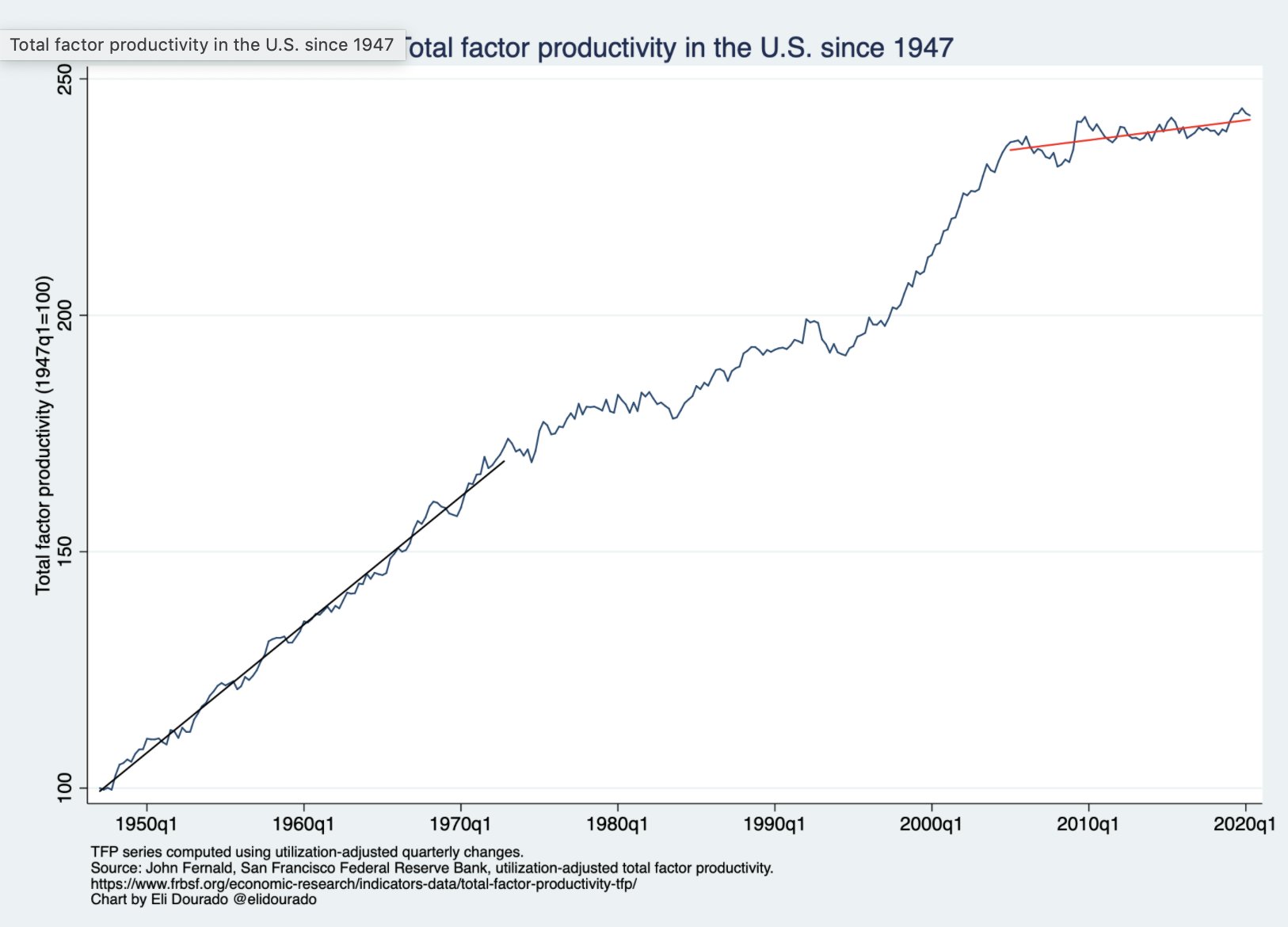

Productivity growth has not been as fast as proponents of the "digital revolution" expected. Besides a short burst of higher productivity growth in the late 1990s and early 2000s (the higher slope portion), it is hard to see the impact.

Source: Data - John Fernald, Chart - Eli Dourado

Economists like Robert Gordon and Tyler Cowen have detailed how productivity growth fell post-1970, but it is less understood why software hasn't picked up the slack.

General-Purpose Technologies versus Management Technologies

General-purpose technology (GPT) is the name economists use for technologies that create broad-based productivity growth. Regular people know them by names like electricity, steel, cars, gasoline, railroads, steam engines, etc. GPTs tend to pack complex technology into a product that is easy for other producers to incorporate into their own products. A new range of possibilities emerges. This asymmetry between how hard a GPT is to create and how easy it is to adopt allows a few talented people to create a product that a wide range of producers can easily incorporate into their businesses.

Management techniques are a technology in a broad sense of the word. Assembly lines are one prominent example. Management techniques are fiendishly difficult to adopt. Improvements in management manifest as differences in company productivity that force most competitors to bankruptcy or merger, if competition allows.[1] Good management and scale are closely linked. Ford reduced costs with assembly lines, increasing sales, which funded more specialized assembly lines and equipment. Thousands of automakers have existed in the United States. Only a few were able to adopt assembly line techniques and compete.

GPTs spread like wildfire on account of their asymmetry. The spread of management technology is a slow, plodding process as the leaders slowly grind down their competitors. Society does not see the benefits of new management techniques until the companies employing them have scaled and absorbed significant market share.

Software is (Mostly) a Management Technology

A computer sure looks like a GPT. It is magic technology that almost anyone can use to message Grandma or learn incredible amounts of information. A closer look shows that the GPT aspects are shallow. For software to increase total factor productivity (TFP), it has to mimic human tasks in great detail. Writing software starts like implementing any management system - defining and reimagining processes.

Tell me How to Make a PB&J

There is a classic game that teachers and camp counselors play with their students. The game is that the teacher acts oblivious, and the students have to give the teacher precise instructions on how to make a peanut butter and jelly sandwich. Hilarity ensues. MIT robotics gives this example from a teacher lesson plan.[2]

- Take a slice of bread

- Put peanut butter on the slice

- Take a second slice of bread

- Put jelly on that slice

- Press the slices of bread together

According to the lesson plan, following these instructions literally "would result in you taking a slice of bread, putting the jar of peanut butter on top of the slice, taking a second slice of bread, putting the jar of jelly on top of that slice, then picking up both slices of bread and pushing them together. After this, tell the students that their peanut butter and jelly sandwich doesn’t seem quite right and ask for a new set of instructions."

The ideal instruction set is much more complex than you would naturally tell another human:

- Take a slice of bread

- Open the jar of peanut butter by twisting the lid counter clockwise

- Pick up a knife by the handle

- Insert the knife into the jar of peanut butter

- Withdraw the knife from the jar of peanut butter and run it across the slice of bread

- Take a second slice of bread

- Repeat steps 2-5 with the second slice of bread and the jar of jelly.

- Press the two slices of bread together such that the peanut butter and jelly meet

The gap between these instructions is a form of tacit knowledge. Humans acquire tacit knowledge through watching or practice. Computers require even more exacting instructions than our acceptable PB&J answer because they have zero tacit knowledge. Every command ends up as ones and zeros. A humbling part of writing software is that the computer does exactly what you tell it to do.
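To make the point concrete, here is a minimal sketch of a machine with zero tacit knowledge. Everything in it is hypothetical: the `make_sandwich` function, the verb names, and the tiny instruction format are invented for illustration and are not from the MIT lesson plan. The toy "robot" only knows three verbs and does exactly what it is told.

```python
def make_sandwich(steps):
    """Follow each (verb, thing) step literally and report what sits on each slice."""
    opened = set()   # jars whose lids have been removed
    slices = []      # one entry per slice of bread taken so far
    for verb, thing in steps:
        if verb == "take_slice":
            slices.append("plain bread")
        elif verb == "open":
            opened.add(thing)
        elif verb == "put":
            # No tacit knowledge: if nobody said to open the jar,
            # the whole closed jar goes on top of the bread.
            if thing in opened:
                slices[-1] = f"{thing} spread on bread"
            else:
                slices[-1] = f"closed jar of {thing} on bread"
        else:
            raise ValueError(f"unknown instruction: {verb}")
    return slices


# The instructions you would give a human.
vague = [
    ("take_slice", None), ("put", "peanut butter"),
    ("take_slice", None), ("put", "jelly"),
]

# The instructions a literal machine needs.
precise = [
    ("take_slice", None), ("open", "peanut butter"), ("put", "peanut butter"),
    ("take_slice", None), ("open", "jelly"), ("put", "jelly"),
]

print(make_sandwich(vague))
print(make_sandwich(precise))
```

The vague list is not wrong for a person. It is only wrong for a reader that cannot fill in the missing step on its own.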

Diseconomies of Scale Hit Early

Because computers lack tacit knowledge, instructions must have bit-level detail. A supervisor can't walk up to a computer, show it how to attach a fastener, then walk away. A software engineer has to write a program that specifies every actuator movement in sub-millimeter detail.

The world is an absurdly complex place. Few processes are the same at the bit level. There is a choice between writing optimal, custom software or sub-optimal software that covers wider use but does not do everything you need.

There is a good reason why one cardinal piece of advice for software startups is to focus on a tiny sliver of the market and make good software for that process before trying to tackle more products.

Managing Complexity

Software engineers improve the ability of software to absorb more of our complex world. The implementation at the company level is not as simple as it seems.

Bottlenecks and Silos

A company contains many processes that create value for its customers. Management technology determines how good the company is at organizing its resources to produce value.

The Pullman Company in 1916 provides a good example. Pullman had a sprawling factory with 35 departments organized to produce rail cars. Many departments were dependent on the brass works, where seven managers and 350 workers cast brass parts. The brass department was a mess - orders came in from all over the plant, and the harried managers struggled to organize the production to fill them. Workers from other departments would come and bother line workers until they got a specific part they were waiting on.

The solution was to force every other department to submit elaborate forms detailing the parts they needed. The seven managers grew to an army of 47 clerks that processed the paperwork to determine material needs and create optimized production schedules. Performance at the brass works improved and lowered the cost of a new rail car enough to offset the cost of the extra employees many times.[3]

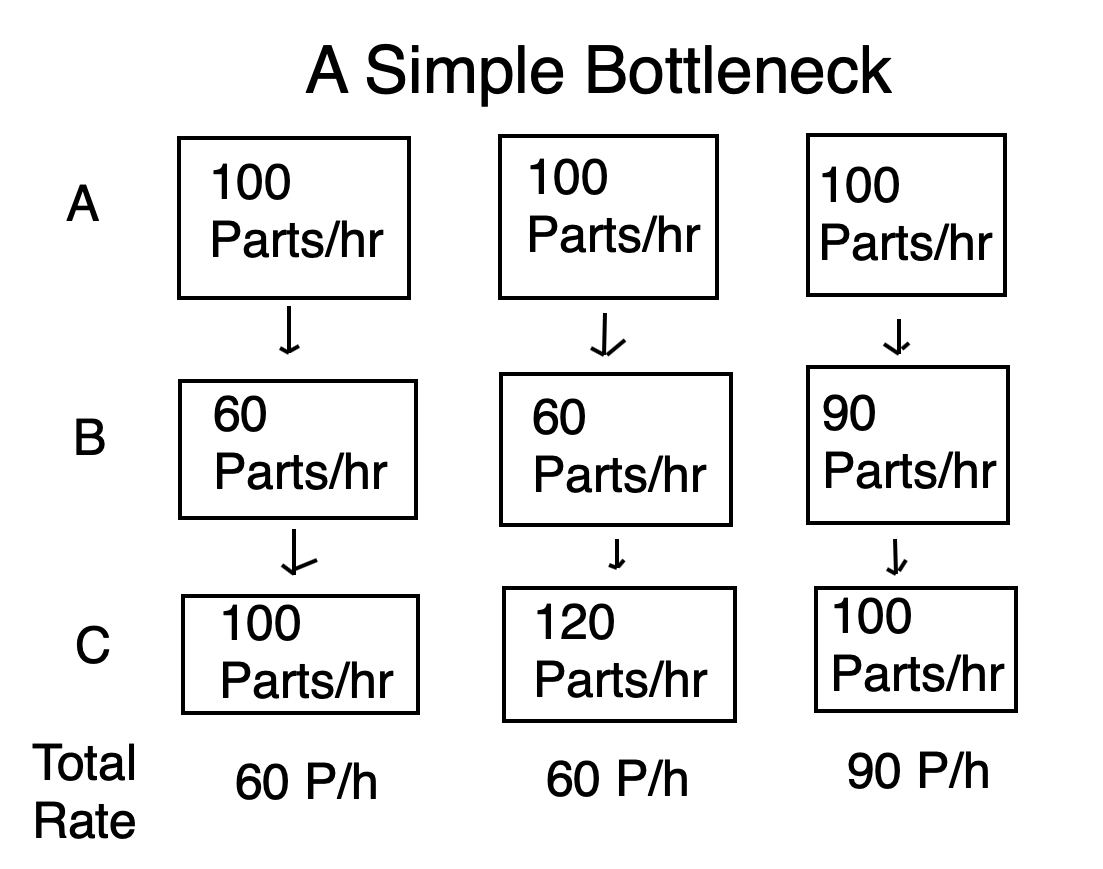

If you aren't familiar with systems thinking, it may seem unusual that adding a bunch of employees and paperwork decreased costs. The simplistic answer is that the brass works was a bottleneck to the entire production process.

In the process above, Step B is our bottleneck, like the brass works. The total output of the process is limited by how many parts per hour Step B can handle. Even if Step C convinces management to buy it a fancy new machine, it won't increase the output of the process. Step B has to be improved to increase output. Increasing Step B's capacity improves profitability because Steps A and C had extra capacity that carries fixed costs. Increased throughput outweighs cost increases from Step B. Steps A and C did not see significant cost increases. They used a higher percentage of their capacity.
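The arithmetic behind the bottleneck argument fits in a few lines. This is a sketch with made-up numbers: the capacities (parts per hour) and fixed costs (dollars per hour) are assumptions chosen for illustration, not Pullman data, and the function names are hypothetical.

```python
def throughput(capacities):
    """A serial process can only run as fast as its slowest step."""
    return min(capacities.values())


def cost_per_part(capacities, fixed_costs):
    """Spread the hourly fixed cost of every step over the parts actually produced."""
    return sum(fixed_costs.values()) / throughput(capacities)


# Assumed starting point: Step B is the bottleneck.
capacities = {"A": 100, "B": 40, "C": 90}
fixed_costs = {"A": 500, "B": 300, "C": 450}

# Option 1: buy Step C a fancy new machine. Output does not move.
upgrade_c = {**capacities, "C": 150}

# Option 2: spend more on Step B (extra clerks, in the Pullman story).
upgrade_b = {**capacities, "B": 80}
fixed_costs_b = {**fixed_costs, "B": 500}

print(throughput(capacities), cost_per_part(capacities, fixed_costs))
print(throughput(upgrade_c), cost_per_part(upgrade_c, fixed_costs))
print(throughput(upgrade_b), cost_per_part(upgrade_b, fixed_costs_b))
```

In this toy case, Step B's cost rises by two thirds, yet the cost per part falls from 31.25 to 18.125 because Steps A and C finally use capacity they were already paying for.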

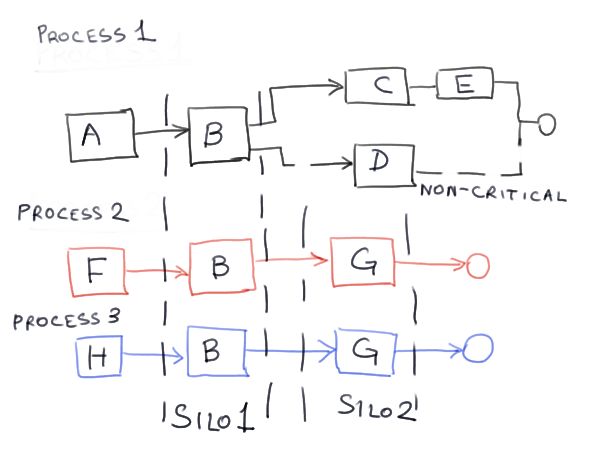

Organizations are much more complex than a three-step process. Venkatesh Rao describes a more complicated system where some departments within the company become "silos" that house critical technology and human resources.[4] Instead of an individual step as the bottleneck, it is a department enmeshed in many processes, like the brass works. These silos are also likely to be a core competency and comparative advantage.

Source: ribbonfarm.com

Software startups often target applications that many companies share - accounting, human resources, communications, etc. Companies want to digitize by purchasing off-the-shelf software. No one creates software for processes that underlie their unique competitive advantages. They buy excess capacity in departments that aren't their core business instead. Silos also tend to use pidgin languages and force employees in non-critical departments to learn them. Completing a complicated form if you need parts from the brass works is an example. Generic software might not mix well with the company's core processes. Because the software added to excess capacity, the only way to earn a return is by layoffs. Companies are laying off workers who understand how to interface with their unique processes!

Agile Thinking

In the dark old days, the software writing process started by building a long list of requirements from stakeholders and assembling them into Gantt charts that spanned hallways. Software engineers disappeared into a dark hole for months to write a monolithic program that met the requirements. Just like the PB&J exercise, the results were subpar. Complexity means requirements are always wrong or suboptimal. Software teams get handed the project of the brass works while it is the ad hoc mess with seven managers, not the perfectly systemized process with 47 clerks and every procedure detailed.

A group of software engineers realized that better ways of organizing software development existed. Through a combination of deduction and research, they found systems-based management techniques like Toyota Production System (TPS) break extremely complex process flows into small chunks and produce outputs with very low variation. The group adopted many principles from their own experience, TPS, lean, and other systems to create the idea of Agile Software Development.

If you give an Agile software team requirements for the software you need, they automatically assume those requirements are wrong. Instead of building what you ask, they take a small piece and hack together a prototype and then get your feedback. Then they do it again and again. The team continuously improves the software based on your needs that are difficult to communicate.

Agile methods are a reason why software-driven challengers can fell even the most competent incumbents. Digitizing a process requires the challengers to know a business in more detail than the incumbents, and the challengers use systems that thrive with poor starting knowledge. Incumbents have to adopt systems thinking and learn how to write good software to compete.

Eliminate Communication

Jeff Bezos famously advocates for less communication between his employees and departments. If we go back to the Pullman brass works, slipping a form through a slot is less communication than walking around and bothering managers and line workers to make a part. Wal-Mart discovered this lesson when their inventory and distribution system became too complicated for them to manage. They instead built a system that allowed their suppliers to see their stock at stores and warehouses with minimal communication between Wal-Mart employees and suppliers.

Amazon and other modern software teams create code in small chunks to limit communication, increase maintainability, and improve clarity. They set rules on how to talk to each piece of code. Application programming interfaces (APIs, a standard for machine-to-machine communication), micro-services, and functions are all versions of this concept. These strategies are like decentralizing the code base, allowing increased complexity while decreasing fragility.
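A small sketch of what "rules on how to talk to each piece of code" can look like. The names here (`InventoryAPI`, `WarehouseInventory`, `units_to_ship`) are hypothetical and loosely modeled on the Wal-Mart supplier example. They do not describe any real Wal-Mart or Amazon system. The supplier's code depends only on one narrow question it is allowed to ask.

```python
from typing import Protocol


class InventoryAPI(Protocol):
    """The only thing outsiders are allowed to ask: how many units are in stock?"""

    def stock_level(self, sku: str) -> int: ...


class WarehouseInventory:
    """One possible implementation. Callers never see how counts are stored."""

    def __init__(self, counts: dict[str, int]) -> None:
        self._counts = dict(counts)  # internal detail, free to change later

    def stock_level(self, sku: str) -> int:
        return self._counts.get(sku, 0)


def units_to_ship(inventory: InventoryAPI, sku: str, target: int) -> int:
    """Supplier-side logic: top the shelf back up to the target, never below zero."""
    return max(0, target - inventory.stock_level(sku))


store = WarehouseInventory({"soap": 30, "paper towels": 120})
print(units_to_ship(store, "soap", 100))
print(units_to_ship(store, "paper towels", 100))
```

The supplier never phones a store manager, and the store can rewrite its internals without telling the supplier, as long as `stock_level` keeps answering the same question.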

Abstraction and Reuse Increase Code Productivity

Software engineers have techniques to allow code reuse and handle more complexity with less code. In our correct PB&J example, note how instruction seven says to repeat instructions two through five, an example of code reuse.

Abstraction is when a high-level program uses many small chunks of code to accomplish a task, avoiding the underlying complexity. Software libraries, both open and proprietary, facilitate reuse and abstraction. As the effort required to create code decreases, writing software for smaller-scale processes is possible.
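The "repeat steps 2-5" instruction maps directly onto a function. In this sketch the function names `spread` and `make_pbj` are invented for illustration. The step text comes from the detailed PB&J list earlier in the post.

```python
def spread(jar):
    """Steps two through five of the detailed list, written once for any jar."""
    return [
        f"Open the jar of {jar} by twisting the lid counterclockwise",
        "Pick up a knife by the handle",
        f"Insert the knife into the jar of {jar}",
        f"Withdraw the knife from the jar of {jar} and run it across the slice of bread",
    ]


def make_pbj():
    """The high-level recipe: it calls spread() twice instead of spelling it out twice."""
    steps = ["Take a slice of bread"]
    steps += spread("peanut butter")
    steps += ["Take a second slice of bread"]
    steps += spread("jelly")
    steps.append(
        "Press the two slices of bread together such that the peanut butter and jelly meet"
    )
    return steps


for number, step in enumerate(make_pbj(), start=1):
    print(number, step)
```

`make_pbj` reads almost like the instructions you would give a human, and the fussy details live in one place. A honey sandwich would cost one more call to `spread`, not four more lines.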

Vertical Integration is a Dominant Strategy

Real-world complexity gives us universal software with a low level of capability, like email. It encourages software providers to provide misfit tools for only a portion of our workflow. It is almost impossible for a third-party software provider to reorganize an industry's core processes. If they did, they would be a first-party company. We want an assembly line, but we get a mallet and file subscription instead. To have an impact on TFP, we need assembly lines.

Investor Keith Rabois has the recipe for building assembly lines pinned to his Twitter account:

"Formula for startup success: Find large highly fragmented industry w low NPS; vertically integrate a solution to simplify value product."

NPS stands for Net Promoter Score. It is a measure of how much a customer loves a service. An example of a low NPS industry is car sales.

A vertically integrated company with a large potential market can justify building an assembly line. Amazon, Stripe, and Tesla are some of the best examples.

The Nature of The Firm

According to economic theory pre-1937, it was not obvious why companies existed. Economists thought markets and prices organized the economy, yet companies with internal central planning were the bedrock of the economy. Ronald Coase realized companies (or "firms") existed because transactions and contracts taking place on the open market had costs in time, money, and uncertainty.[5] If a company could be organized and directed by a competent manager, transactions within the firm could be cheaper than open market transactions. The company would grow until its internal transactions cost more than external transactions. Larger firms result when:

- "the less the costs of organising and the slower these costs rise with an increase in the transactions organised."

- "the less likely the entrepreneur is to make mistakes and the smaller the increase in mistakes with an increase in the transactions organised."

- "the greater the lowering (or the less the rise) in the supply price of factors of production to firms of larger size."
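Coase's stopping rule can be sketched as a loop. This is a toy model under loud assumptions: the linear cost curve, the parameter names, and the numbers are all invented for illustration and are not from Coase's paper. The firm keeps pulling transactions in-house as long as the next internal one is cheaper than buying on the market.

```python
def firm_size(market_cost, base_cost, cost_growth, max_size=10_000):
    """Count the transactions organized internally before the market becomes cheaper.

    Assumed cost model: the next internal transaction costs
    base_cost + cost_growth * (transactions already organized).
    """
    size = 0
    while size < max_size and base_cost + cost_growth * size < market_cost:
        size += 1
    return size


# Organizing costs rise quickly with each added transaction.
print(firm_size(market_cost=100, base_cost=20, cost_growth=5))

# A tool that slows the rise in organizing costs.
print(firm_size(market_cost=100, base_cost=20, cost_growth=1))
```

In this toy model, cutting the growth rate of organizing costs from 5 to 1 raises the stopping point from 16 transactions to 80, which is the first of Coase's three conditions in action.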

Software Drives Scale, Scale Justifies Investment

A precise technology that helps monitor operations and has low scaling costs will increase company size. Software fits the bill. We've solved the "find a fragmented industry" part of Rabois's tweet. Software increased the ideal company size. But why does it need to be a big industry? There are several possible reasons, but a critical one is that software has high upfront costs. Mapping complex transactions and processes takes a lot of time and money. John Collison, a co-founder of Stripe, explains in a podcast:

When we started, a lot of people told us that payments is a scale business. You'll never make it son. And only the very large companies can survive. We were like, "No, you don't realize things are different." Now, that we've gotten a chance to actually become familiar in our operating. We're like, "Wow, this really is a scale business." In that, as you look at what's required in operating, payments is a business where you make literally pennies on a per transaction basis and you have to have an enormous number of them to actually be able to operate with any modicum of profitability. And you would not believe, I mean, it's fairly obvious that it's a fixed cost business and then you need to get enough business flowing through you to make the economics work. I think what's interesting is as things have moved online, the fixed costs have gone way up compared to what was needed to run a domestic only payments business.

If we think about just again, going back to your "invest like the best premium model" where you got access to exclusive content, for smart people, the Patrick interviews are only available on the paid product. And so as we think about that business, and again, just Stripe to do to unlock the payment system for that. We have engineers who are based in Singapore. They have built custom integrations with the local Malaysian bank transfer system. And they actually are now friends with the people, the engineers at the local Malaysian bank transfer system, because it's still work in flies itself. And so they're kind of working with them on some of the functionality that's needed. And so that way, if you have someone, a listener who's from Malaysia, they can pay the way that they're used to doing so, not just with a credit card, but with a bank account in Malaysia. Stripe has engineers in Ireland who are similarly a French local card switch is actually different to visa Mastercards and you need to be able to support that, to be able to properly serve French customers.

And so we've just been shocked, the degree to which, if you want to be able to reach every global customer, there really are very large economies of scale with that.

Without a large industry and the promise of scale, it is hard to justify investment in digitizing an entire process. The world is too complex! The high upfront costs contribute to slow market share growth. Each incremental customer requires increasing amounts of effort to build the service they need. Because the software is so custom, it is nearly impossible to integrate across different companies in a value chain. Remember, we need 1s and 0s accuracy! Imagine the entire real estate industry (including agents, financiers, inspectors) all agreeing on standards that have detail at the bit level. Now you know why we have iBuyers instead. Current software reduces the costs within firms more than it reduces the cost of market transactions.

Online-only businesses have much more control of the variability their software sees. The real world is not so kind. Companies like Amazon and Tesla have to write more complex software and build the physical infrastructure to match their software. These companies go through long periods of negative or breakeven cash flow as they grow. Only once they reach a significant scale do they produce high margins. Even if incumbents learn how to define their processes at the bit level, they face a deficit in written software capital. The precision required means copy and paste does not work.

Dominant, vertically integrated companies succeed by digitizing the core silos that bottleneck the system. Like Pullman, profits increase through fixed cost absorption.
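A back-of-the-envelope sketch shows why "pennies per transaction" plus large fixed costs demands scale. All numbers below are assumptions picked for easy arithmetic, not Stripe figures, and the function names are hypothetical. Amounts are in cents to keep the math exact.

```python
def cost_per_transaction(fixed_cost, variable_cost, volume):
    """Average cost in cents: fixed costs spread over volume, plus the per-unit cost."""
    return fixed_cost / volume + variable_cost


def breakeven_volume(fixed_cost, fee, variable_cost):
    """Smallest whole number of transactions whose margin covers the fixed cost."""
    margin = fee - variable_cost
    if margin <= 0:
        raise ValueError("each transaction loses money, so no volume breaks even")
    return -(-fixed_cost // margin)  # ceiling division on integers


FIXED = 500_000_000   # assumed: $5 million of engineering and integrations, in cents
FEE = 5               # assumed: 5 cents earned per transaction
VARIABLE = 3          # assumed: 3 cents of direct cost per transaction

print(breakeven_volume(FIXED, FEE, VARIABLE))
print(cost_per_transaction(FIXED, VARIABLE, 1_000_000))
print(cost_per_transaction(FIXED, VARIABLE, 250_000_000))
```

Under these assumptions, a million transactions cost 503 cents each against a 5-cent fee, and the business only breaks even at 250 million transactions. Every new country or payment method adds to `FIXED`, which pushes the break-even point further out.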

Competition

If incumbents put up little competition for the expanding behemoths, will we end up with monopolies? No, competition will come as the behemoths compete with each other. Coase predicts this in his paper. He notes that as a firm expands selling Product A, diminishing returns may mean that Product B becomes a better target for expansion. The model of increasing effort for each new customer fits this nicely. Amazon sells cloud computing services and ads besides books. Google sells search ads and cloud computing while also trying its hand at self-driving taxis. Apple is making a car besides selling its computing hardware and services.

Implications for Productivity

The current status quo means we don't get productivity growth until these software-driven companies become behemoths. Amazon was founded in 1994, almost thirty years ago. In 2020, it was still less than 10% of total retail sales. Is it any wonder that we haven't seen robust productivity gains? Amazon is still mapping and digitizing processes at prodigious rates. A tech boot camp, Lambda School, has a custom program to train engineers for Amazon. Lambda CEO Austen Allred weighs in:

"The demand from employers for Lambda School's coming Backend Development track is the most intense demand I've ever seen for anything we've ever done. By a lot. Giant companies, hiring like crazy, need a lot of backend help

It makes sense: Imagine you're a big company (we built the curriculum with Amazon for a reason) with nearly infinite demand. Scaling those systems, building APIs, and integrations... there's an infinite amount of work."

In many industries, assembly line builders are just getting started. If these companies maintain rapid growth as their market share increases, TFP should finally show some life. Increasing complexity in serving the remaining customers may increase the required code and process mapping to the point where we get a drawn-out, slow transition instead of a boost.

Looking for Software GPTs

To avoid that fate, we need more software GPTs besides email, messaging, and content. Or our software engineers have to increase productivity faster than complexity increases.

A.I.

Almost every recent A.I. advance has come from one tiny corner of the field, machine learning. Machine learning exposes a set of connected nodes, known as neural nets, to mass amounts of labeled real-world data in an attempt to give those nodes tacit knowledge. The breakthrough example was software that was able to identify cat pictures.

So far, these neural nets have given us some great demos but mostly niche real-world applications. We don't have self-driving cars quite yet! The reason they have not produced breakthrough results is the complexity of the world. Neural nets are stupid, literally. If they haven't seen something in the training data, they don't know what to do. Breakthrough applications will require a mind-boggling amount of perfectly labeled training data. Most companies still have people labeling data by hand! Leaders in the field are building tools to reduce the labor content of the labeling process. Machine learning software will probably increase company size because collecting real-world data has a higher upfront cost than many existing software projects.

Human brains are also neural nets, proving that the ceiling is high. If there were breakthroughs in neural nets that reduced the training data requirements, that could make more applications feasible. The increase in neural net complexity would still have to outrun the increasing complexity of applications.

No Code Software

No code or low code software attempts to allow non-technical users to code. Some applications, like spreadsheets and website design, have been successful.

A growing category of no code is Robotic Process Automation (RPA). Moving some process mapping to those that hold the knowledge avoids having to teach it to software engineers. Workers can handle problems themselves without having to justify the project to their boss.

There are limits to no code. No code can hurt instead of help the bottom line if the employee already has excess capacity. Building user interfaces requires a lot of code and limits the underlying functionality. For no code to impact top-level TFP, it would require a pairing with neural nets that interface with users and write the underlying code. Companies are trying this concept. The jury is still out on whether it will be a software engineer productivity tool only or eventually allow a non-technical person to write code.

Blockchains and Open State Software

Open state, decentralized blockchains take the ideas behind APIs and micro-services and turbocharge them. Open state means the current data related to a process is visible publicly or to anyone with a key. Wal-Mart made portions of their systems open state to help their sellers manage inventory. And Amazon uses open state systems and APIs to help its sellers. Amazon also has departments that sell internally developed services to outside customers (AWS) using similar APIs and dashboards.

Public blockchains make systems open state and include APIs by default. They could reduce the communication and coordination that vendors, departments, customers, and other stakeholders need to conduct transactions. A blockchain transaction might be more expensive than a database transaction, but it is dramatically cheaper than many market transactions. The unit of organization can be pushed much smaller by allowing more transactions to be market-oriented.

Reducing the cost of transactions could allow networks of smaller organizations to be competitive with our behemoths. But it may not necessarily lead to smaller companies. Coase notes that while firms emerged organically, governments have found it convenient to organize regulations and taxes around firms, increasing firm size. Blockchains lower transaction costs for both internal and external market-like transactions. External transactions have a fixed cost component from government intervention, favoring internal market transactions, increasing the ideal firm size. The result is more market-oriented transactions, either way. Reducing transaction costs and other promised benefits like improving capital allocation extend the software as a management technology paradigm.

Technology Research

After decades of stagnation, progress has improved in fusion, thermoelectric materials, battery chemistry, biology, and room-temperature semiconductors. Better computing has played a part by increasing the number of experiments a researcher can do and better modeling what ideas might work.

Quantum Computing

Our world is quantum. Unfortunately, our current computing paradigms struggle to compute the complexity around us. Quantum computers would give us better models. While these computers could solve many optimization problems, their most profound uses will be in areas like materials and biology. Finding ultra-strong alloys or understanding nuclear reactions better will have more impact than typical software processes.

Conclusion

Using software to operate our economic processes means putting huge portions of complex human life into code. While software improves through better tooling and faster hardware, the problems left to solve have steeply increasing complexity-to-reward ratios.

GPTs can flatten complexity. A strong, cheap structural material eliminates the need for complicated designs in new applications. Inexpensive energy sources reduce the need to increase efficiency. Brute force can prevail. GPTs are more likely to come from energy, transportation, biology, or materials technologies. Since software is a multiplier, any increase in input quality or quantity is even more beneficial. Software is more impactful in a universe with 1 trillion humans than one with 10 billion humans.

Software rearranges inputs to be more efficient. It will improve the quality of life. To guarantee significant advances, we need better inputs and more of them.

[1] Management as a Technology?

[2] MIT Robotics PB&J Lesson

[3] Pullman Articles; System, Magazine of Business.

[4] The Silo Reconsidered, Ribbonfarm Blog.

[5] The Nature of the Firm

[6] Invest like the Best Podcast, John Collison episode

Why Doesn't Software Show Up in Productivity?

2021 August 10

Software is actually a management technology.

Software's Impact on Productivity

Productivity growth has not been as fast as proponents of the "digital revolution" expected. Besides a short burst of higher productivity growth in the late 1990s and early 2000s (the higher slope portion), it is hard to see the impact.

Source: Data - John Fernald, Chart - Eli Dourado

Economists like Robert Gordon and Tyler Cowen have detailed how productivity growth fell post-1970, but it is less understood why software hasn't picked up the slack.

General-Purpose Technologies versus Management Technologies

General-purpose technology (GPT) is the name economists use for technologies that create broad-based productivity growth. Regular people know them by names like electricity, steel, cars, gasoline, railroads, steam engines, etc. GPTs tend to pack complex technology into a product that is easy for other producers to implement into their products. A new range of possibilities emerges. This asymmetry allows a few talented people to create a product that a wide range of producers can easily incorporate into their businesses.

Management techniques are a technology in a broad sense of the word. Assembly lines are one prominent example. Management techniques are fiendishly difficult to adopt. Improvements in management manifest as differences in company productivity that force most competitors to bankruptcy or merger, if competition allows.[1] Good management and scale are closely linked. Ford reduced costs with assembly lines, increasing sales, which funded more specialized assembly lines and equipment. Thousands of automakers have existed in the United States. Only a few were able to adopt assembly line techniques and compete.

GPTs spread like wildfire on account of their asymmetry. The spread of management technology is a slow, plodding process as the leaders slowly grind down their competitors. Society does not see the benefits of new management techniques until the companies employing them have scaled and absorbed significant market share.

Software is (Mostly) a Management Technology

A computer sure looks like a GPT. It is magic technology that almost anyone can use to message Grandma or learn incredible amounts of information. A closer look shows that the GPT aspects are shallow. For software to increase TFP, it has to mimic human tasks in great detail. Writing software starts like implementing any management system - defining and reimagining processes.

Tell me How to Make a PB&J

There is a classic game that teachers and camp counselors play with their students. The game is that the teacher acts oblivious, and the students have to give the teacher precise instructions on how to make a peanut butter and jelly sandwich. Hilarity ensues. MIT robotics gives this example from a teacher lesson plan.[2]

The ideal instruction set is much more complex than you would naturally tell another human:

The gap between these instructions is a form of tacit knowledge. Humans acquire tacit knowledge through watching or practice. Computers require even more exacting instructions than our acceptable PB&J answer because they have zero tacit knowledge. Every command ends up as ones and zeros. A humbling part of writing software is that the computer does exactly what you tell it to do.

Diseconomies of Scale Hit Early

Because computers lack tacit knowledge, instructions must have bit-level detail. A supervisor can't walk up to a computer, show it how to attach a fastener, then walk away. A program has to be written by a software engineer that details every actuator movement in sub-millimeter detail.

The world is an absurdly complex place. Few processes are the same at the bit level. There is a choice between writing optimal, custom software or sub-optimal software that covers wider use but does not do everything you need.

There is a good reason why one cardinal piece of advice for software startups is to focus on a tiny sliver of the market and make good software for that process before trying to tackle more products.

Managing Complexity

Software engineers improve the ability of software to absorb more of our complex world. The implementation at the company level is not as simple as it seems.

Bottlenecks and Silos

A company contains many processes that create value for its customers. Management technology determines how good the company is at organizing its resources to produce value.

The Pullman Company in 1916 provides a good example. Pullman had a sprawling factory with 35 departments organized to produce rail cars. Many departments were dependent on the brass works, where seven managers and 350 workers cast brass parts. The brass department was a mess - orders came in from all over the plant, and the harried managers struggled to organize the production to fill them. Workers from other departments would come and bother line workers until they got a specific part they were waiting on.

The solution was to force every other department to submit elaborate forms detailing the parts they needed. The seven managers grew to an army of 47 clerks that processed the paperwork to determine material needs and create optimized production schedules. Performance at the brass works improved and lowered the cost of a new rail car enough to offset the cost of the extra employees many times.[3]

If you aren't familiar with systems thinking, it may seem unusual that adding a bunch of employees and paperwork decreased costs. The simplistic answer is that the brass works was a bottleneck to the entire production process.

In the process above, Step B is our bottleneck, like the brass works. The total output of the process is limited by how many parts per hour Step B can handle. Even if Step C convinces management to buy it a fancy new machine, it won't increase the output of the process. Step B has to be improved to increase output. Increasing Step B's capacity improves profitability because Steps A and C had extra capacity that carries fixed costs. Increased throughput outweighs cost increases from Step B. Steps A and C did not see significant cost increases. They used a higher percentage of their capacity.
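The bottleneck argument can be sketched in a few lines of Python. This is a minimal illustration, not Pullman data: the step capacities are hypothetical numbers, and the function names `line_throughput` and `utilization` are assumptions made for the example.

```python
def line_throughput(capacities):
    """Parts per hour for a serial process: capped by the slowest step."""
    return min(capacities.values())


def utilization(capacities):
    """Share of each step's capacity actually used at the line's throughput."""
    output = line_throughput(capacities)
    return {step: output / capacity for step, capacity in capacities.items()}


# Hypothetical capacities in parts per hour (illustrative only).
baseline = {"A": 100, "B": 40, "C": 90}
upgrade_c = {**baseline, "C": 150}  # a fancy new machine for Step C
upgrade_b = {**baseline, "B": 80}   # extra capacity at the bottleneck, Step B

for name, line in [("baseline", baseline),
                   ("upgrade C", upgrade_c),
                   ("upgrade B", upgrade_b)]:
    print(name, line_throughput(line), utilization(line))
```

With these made-up numbers, upgrading Step C leaves throughput at 40 parts per hour, while upgrading Step B doubles it to 80. Step A's utilization rises from 40% to 80% without any new spending on Step A, which is the fixed cost absorption the paragraph describes.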

Organizations are much more complex than a three-step process. Venkatesh Rao describes a more complicated system where some departments within the company become "silos" that house critical technology and human resources.[4] Instead of an individual step as the bottleneck, it is a department enmeshed in many processes, like the brass works. These silos are also likely to be a core competency and comparative advantage.

Source: ribbonfarm.com

Software startups often target applications that many companies share - accounting, human resources, communications, etc. Companies want to digitize by purchasing off-the-shelf software. No one creates software for the processes that underlie their unique competitive advantages. They buy excess capacity in departments that aren't their core business instead. Silos also tend to use pidgin languages and force employees in non-critical departments to learn them. Completing a complicated form if you need parts from the brass works is an example. Generic software might not mix well with the company's core processes. Because the software adds capacity where there was already an excess, the only way to earn a return is through layoffs. Companies are laying off workers who understand how to interface with their unique processes!

Agile Thinking

In the dark old days, the software writing process started by building a long list of requirements from stakeholders and assembling them into Gantt charts that spanned hallways. Software engineers disappeared into a dark hole for months to write a monolithic program that met the requirements. Just as in the PB&J exercise, the results were subpar. Complexity means requirements are always wrong or suboptimal. Software teams get handed the brass works project while it is still the ad hoc mess with seven managers, not the perfectly systemized process with 47 clerks and every procedure detailed.

A group of software engineers realized that better ways of organizing software development existed. Through a combination of deduction and research, they found that systems-based management techniques like the Toyota Production System (TPS) break extremely complex process flows into small chunks and produce outputs with very low variation. The group adopted many principles from their own experience, TPS, lean, and other systems to create the idea of Agile Software Development.

If you give an Agile software team requirements for the software you need, they automatically assume those requirements are wrong. Instead of building what you ask, they take a small piece and hack together a prototype and then get your feedback. Then they do it again and again. The team continuously improves the software based on your needs that are difficult to communicate.

Agile methods are a reason why software-driven challengers can fell even the most competent incumbents. Digitizing a process requires the challengers to know a business in more detail than the incumbents, and the challengers use systems that thrive with poor starting knowledge. Incumbents have to adopt systems thinking and learn how to write good software to compete.

Eliminate Communication

Jeff Bezos famously advocates for less communication between his employees and departments. If we go back to the Pullman brass works, slipping a form through a slot is less communication than walking around and bothering managers and line workers to make a part. Wal-Mart discovered this lesson when their inventory and distribution system became too complicated for them to manage. They instead built a system that allowed their suppliers to see their stock at stores and warehouses with minimal communication between Wal-Mart employees and suppliers.

Amazon and other modern software teams create code in small chunks to limit communication, increase maintainability, and improve clarity. They set rules on how to talk to each piece of code. Application programming interfaces (APIs, a standard for machine-to-machine communication), micro-services, and functions are all versions of this concept. These strategies are like decentralizing the code base, allowing increased complexity while decreasing fragility.
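A minimal Python sketch of "rules on how to talk to each piece of code", reusing the brass works as the example. Everything here is hypothetical: the `PartOrder` form, the `BrassWorks` class, and its methods are illustrative names, not anything Pullman or Amazon actually built.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class PartOrder:
    """The 'form': the only thing other departments may hand over."""
    department: str
    part: str
    quantity: int


class BrassWorks:
    def __init__(self):
        self._queue = deque()   # internal schedule, hidden from callers
        self._next_ticket = 1

    def submit_order(self, order: PartOrder) -> int:
        """Public entry point: validate the form, queue it, return a ticket."""
        if order.quantity <= 0:
            raise ValueError("quantity must be positive")
        ticket = self._next_ticket
        self._next_ticket += 1
        self._queue.append((ticket, order))
        return ticket

    def next_job(self):
        """Used by the brass works itself to pull the oldest queued order."""
        return self._queue.popleft() if self._queue else None


works = BrassWorks()
ticket = works.submit_order(PartOrder("upholstery", "door hinge", 24))
print(ticket, works.next_job())
```

Callers only ever see `submit_order`. The brass works can reorganize its internal queue however it likes without anyone else having to change how they ask for parts, which is the sense in which a narrow interface reduces communication.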

Abstraction and Reuse Increase Code Productivity

Software engineers have techniques to allow code reuse and handle more complexity with less code. In our correct PB&J example, note how instruction seven says to repeat instructions two through five, an example of code reuse.

Abstraction is when a high-level program uses many small chunks of code to accomplish a task, avoiding the underlying complexity. Software libraries, both open and proprietary, facilitate reuse and abstraction. As the effort required to create code decreases, writing software for smaller-scale processes is possible.
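Reuse and abstraction can be shown together in a short Python sketch. The steps below are hypothetical stand-ins, not the instruction list from the PB&J example, and the function names `spread` and `make_sandwich` are assumptions made for illustration.

```python
def spread(ingredient, bread_slice):
    """Reusable chunk: the same four steps work for any spreadable ingredient."""
    return [
        f"open the {ingredient} jar",
        f"scoop {ingredient} with the knife",
        f"spread {ingredient} on {bread_slice}",
        f"close the {ingredient} jar",
    ]


def make_sandwich():
    """High-level program: describes the task without repeating the details."""
    steps = ["lay out two slices of bread"]
    steps += spread("peanut butter", "slice 1")  # first use of the chunk
    steps += spread("jelly", "slice 2")          # reuse instead of rewriting
    steps.append("press the slices together")
    return steps


for number, step in enumerate(make_sandwich(), start=1):
    print(number, step)
```

`make_sandwich` is four lines of logic but expands to ten instructions. Adding a third ingredient costs one more line, not four, which is why reuse makes it cheaper to write software for smaller-scale processes.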

Vertical Integration is a Dominant Strategy

Real-world complexity gives us universal software with a low level of capability, like email. It encourages software providers to provide misfit tools for only a portion of our workflow. It is almost impossible for a third-party software provider to reorganize an industry's core processes. If they did, they would be a first-party company. We want an assembly line, but we get a mallet and file subscription instead. To have an impact on TFP, we need assembly lines.

Investor Keith Rabois has the recipe for building assembly lines pinned to his Twitter account:

NPS stands for Net Promoter Score. It is a measure of how much a customer loves a service. An example of a low NPS industry is car sales.

A vertically integrated company with a large potential market can justify building an assembly line. Amazon, Stripe, and Tesla are some of the best examples.

The Nature of The Firm

According to economic theory pre-1937, it was not obvious why companies existed. Economists thought markets and prices organized the economy, yet companies with internal central planning were the bedrock of the economy. Ronald Coase realized companies (or "firms") existed because transactions and contracts taking place on the open market had costs in time, money, and uncertainty.[5] If a company could be organized and directed by a competent manager, transactions within the firm could be cheaper than open market transactions. The company would grow until its internal transactions cost more than external transactions. Larger firms result when:

Software Drives Scale, Scale Justifies Investment

A precise technology that helps monitor operations and has low scaling costs will increase company size. Software fits the bill. We've solved the "find a fragmented industry" part of Rabois's tweet. Software increased the ideal company size. But why does it need to be a big industry? There are several possible reasons, but a critical one is that software has high upfront costs. Mapping complex transactions and processes takes a lot of time and money. John Collison, a co-founder of Stripe, explains in a podcast:

Without a large industry and the promise of scale, it is hard to justify investment in digitizing an entire process. The world is too complex! The high upfront costs contribute to slow market share growth. Each incremental customer requires increasing amounts of effort to build the service they need. Because the software is so custom, it is nearly impossible to integrate across different companies in a value chain. Remember, we need 1s and 0s accuracy! Imagine the entire real estate industry (including agents, financiers, inspectors) all agreeing on standards that have detail at the bit level. Now you know why we have iBuyers instead. Current software reduces the costs within firms more than it reduces the cost of market transactions.
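A rough way to see why upfront cost demands a large market is a break-even calculation. The sketch below is a simplification under stated assumptions: every dollar figure and customer count is hypothetical, the function names are made up for the example, and the margin per customer is held constant even though the text argues that effort per customer actually rises.

```python
import math


def break_even_customers(upfront_cost, margin_per_customer):
    """Customers needed before the upfront software investment is repaid."""
    return math.ceil(upfront_cost / margin_per_customer)


def is_investment_justified(upfront_cost, margin_per_customer, reachable_customers):
    """True if the reachable market is at least as large as the break-even point."""
    needed = break_even_customers(upfront_cost, margin_per_customer)
    return reachable_customers >= needed


# Hypothetical figures: $10M to map and digitize a process, $2,000 margin per customer.
upfront = 10_000_000
margin = 2_000

print(break_even_customers(upfront, margin))             # customers needed
print(is_investment_justified(upfront, margin, 800))     # a niche industry
print(is_investment_justified(upfront, margin, 50_000))  # a large industry
```

Under these assumed numbers the project needs 5,000 customers to pay back, so a niche of 800 potential customers cannot justify it while a market of 50,000 can. Rising effort per customer would push the break-even point higher still.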

Online-only businesses have much more control of the variability their software sees. The real world is not so kind. Companies like Amazon and Tesla have to write more complex software and build the physical infrastructure to match their software. These companies go through long periods of negative or breakeven cash flow as they grow. Only once they reach a significant scale do they produce high margins. Even if incumbents learn how to define their processes at the bit level, they face a deficit in written software capital. The precision required means copy and paste does not work.

Dominant, vertically integrated companies succeed by digitizing the core silos that bottleneck the system. Like Pullman, profits increase through fixed cost absorption.

Competition

If incumbents put up little competition for the expanding behemoths, will we end up with monopolies? No, competition will come as the behemoths compete with each other. Coase predicts this in his paper. He notes that as a firm expands selling Product A, diminishing returns may mean that Product B becomes a better target for expansion. The model of increasing effort for each new customer fits this nicely. Amazon sells cloud computing services and ads besides books. Google sells search ads and cloud computing while also trying its hand at self-driving taxis. Apple is making a car besides selling its computing hardware and services.

Implications for Productivity

The status quo means we don't get productivity growth until these software-driven companies become behemoths. Amazon was founded in 1994, almost thirty years ago. In 2020, it still accounted for less than 10% of total retail sales. Is it any wonder that we haven't seen robust productivity gains? Amazon is still mapping and digitizing processes at prodigious rates. A tech boot camp, Lambda School, has a custom program to train engineers for Amazon. Lambda CEO Austen Allred weighs in:

In many industries, assembly line builders are just getting started. If these companies maintain rapid growth as their market share increases, TFP should finally show some life. But the increasing complexity of serving the remaining customers may raise the required code and process mapping to the point where we get a drawn-out, slow transition instead of a boost.

Looking for Software GPTs

To avoid that fate, we need more software GPTs besides email, messaging, and content. Or our software engineers have to increase productivity faster than complexity increases.

A.I.

Almost every recent A.I. advance has come from one tiny corner of the field, machine learning. Machine learning exposes a set of connected nodes, known as neural nets, to massive amounts of labeled real-world data in an attempt to give those nodes tacit knowledge. The breakthrough example was software that was able to identify cat pictures.

So far, these neural nets have given us some great demos but mostly niche real-world applications. We don't have self-driving cars quite yet! The reason they have not produced breakthrough results is the complexity of the world. Neural nets are stupid, literally. If they haven't seen something in the training data, they don't know what to do. Breakthrough applications will require a mind-boggling amount of perfectly labeled training data. Most companies still have people labeling data by hand! Leaders in the field are building tools to reduce the labor content of the labeling process. Machine learning software will probably increase company size because collecting real-world data has a higher upfront cost than many existing software projects.

Human brains are also neural nets, proving that the ceiling is high. If there were breakthroughs in neural nets that reduced the training data requirements, that could make more applications feasible. The increase in neural net complexity would still have to outrun the increasing complexity of applications.

No Code Software

No code or low code software attempts to allow non-technical users to code. Some applications, like spreadsheets and website design, have been successful.

A growing category of no code is Robotic Process Automation (RPA). Moving some process mapping to those that hold the knowledge avoids having to teach it to software engineers. Workers can handle problems themselves without having to justify the project to their boss.

There are limits to no code. No code can hurt instead of help the bottom line if the employee already has excess capacity. Building user interfaces requires a lot of code and limits the underlying functionality. For no code to impact top-level TFP, it would require a pairing with neural nets that interface with users and write the underlying code. Companies are trying this concept. The jury is still out on whether it will remain a productivity tool for software engineers or eventually allow a non-technical person to write code.

Blockchains and Open State Software

Open state, decentralized blockchains take the ideas behind APIs and micro-services and turbocharge them. Open state means the current data related to a process is visible publicly or to anyone with a key. Wal-Mart made portions of their systems open state to help their suppliers manage inventory. Amazon likewise uses open state systems and APIs to help its sellers. Amazon also has departments that sell internally developed services to outside customers (AWS) using similar APIs and dashboards.

Public blockchains make systems open state and include APIs by default. This could reduce the communication and coordination that vendors, departments, customers, and other stakeholders need to conduct transactions. A blockchain transaction might be more expensive than a database transaction, but it is dramatically cheaper than many market transactions. The unit of organization can be pushed much smaller by allowing more transactions to be market-oriented.

Reducing the cost of transactions could allow networks of smaller organizations to be competitive with our behemoths. But it may not necessarily lead to smaller companies. Coase notes that while firms emerged organically, governments have found it convenient to organize regulations and taxes around firms, increasing firm size. Blockchains lower transaction costs for both internal and external market-like transactions. External transactions have a fixed cost component from government intervention, favoring internal market transactions, increasing the ideal firm size. The result is more market-oriented transactions, either way. Reducing transaction costs and other promised benefits like improving capital allocation extend the software as a management technology paradigm.

Technology Research

After decades of stagnation, progress has picked up in fusion, thermoelectric materials, battery chemistry, biology, and room-temperature superconductors. Better computing has played a part by increasing the number of experiments a researcher can do and by better modeling which ideas might work.

Quantum Computing

Our world is quantum. Unfortunately, our current computing paradigms struggle to compute the complexity around us. Quantum computers would give us better models. While these computers could solve many optimization problems, their most profound uses will be in areas like materials and biology. Finding ultra-strong alloys or understanding nuclear reactions better will have more impact than typical software processes.

Conclusion

Using software to operate our economic processes means putting huge portions of complex human life into code. While software improves through better tooling and faster hardware, the problems left to solve have steeply increasing complexity-to-reward ratios.

GPTs can flatten complexity. A strong, cheap structural material eliminates the need for complicated designs in new applications. Inexpensive energy sources reduce the need to increase efficiency. Brute force can prevail. GPTs are more likely to come from energy, transportation, biology, or materials technologies. Since software is a multiplier, any increase in input quality or quantity is even more beneficial. Software is more impactful in a universe with 1 trillion humans than one with 10 billion humans.

Software rearranges inputs to be more efficient. It will improve the quality of life. To guarantee significant advances, we need better inputs and more of them.

[1] Management as a Technology?

[2] MIT Robotics PB&J Lesson.

[3] Pullman Articles; System, Magazine of Business.

[4] The Silo Reconsidered, Ribbonfarm Blog.

[5] The Nature of the Firm, Ronald Coase.

[6] Invest Like the Best Podcast, John Collison episode.